People ask me quite a lot about how I analyze performance issues. While I have some guidelines, there is no complete answer to this question. The actions I take depend on the situation, and there are many many different scenarios when talking about performance problems.

In this post I’ll describe an analysis I did about a year ago for a client. This started as the client complained about performance issues in one of the application flows. They managed to reproduce it on a test environment, so I could do anything I wanted to understand what is happening.

Part of the flow was inserting many rows into a table, and they claimed that this was the problem. I don’t remember the details (like if they used bulk insert or not, etc.), but they had performance information in their application logs and the time it took them to insert those rows really seemed too long.

Starting the Investigation

The first thing I did was to check the wait events of the session. The highest wait event was “db file sequential read” which is a random I/O access to tables or indexes.

Next, I thought of potential reasons. There are several reasons for slow insert operations resulting in waits on “db file sequential read” event, for example:

- Many indexes on the table (indexes require maintenance during DML operations)

- A table that has many foreign keys to other tables, so Oracle needs to check validity of the data (to make sure there is a parent for every child record)

- Triggers that do a lot of work for every insert

And so on.

I checked the table structure and found quite a few indexes, but no triggers or foreign keys. So my guess was index overhead. But is this a specific index or the number of indexes?

Investigating the Indexes

In order to prove my index theory (and I always try to prove my theories), I simply disabled all the indexes on the table and executed the test. If the root cause was triggers, foreign keys or something else, I would still see bad performance. But I didn’t. The process was really fast. So I assumed I was right and continued investigating the indexes.

Since checking the indexes one by one would have taken a lot of time, I checked their metadata. Most of them were about the same size, but one was significantly larger. This index became my prime suspect, as my logic says that large indexes have more blocks and therefore requires more blocks to be read for the maintenance. I disabled this index, but the process was still slow. I tried to disable some other indexes as well (quite randomly I have to admit), but the performance didn’t improve by much. I needed to find another way to find the problematic index.

Finding the Right Index

At this point I needed different tools than trial and error to find the index. I thought about the fact that I saw many “db file sequential read” waits, so I wanted to check on which index Oracle waits.

We have several ways to see the information:

- ASH – Active Session History records wait events every second, and it has the parameter information (for “db file sequential read” p1 is the file number and p2 is the block number so I can find the segment). You need to remember that it might not record all the information (as it has limited memory space) and it is only available for Enterprise Edition with diagnostic pack (which this specific client had). I ended up not using it.

- AWR – the AWR contains information about segment statistics including segments by I/O. The thing with AWR is that it is a database-wide tool. So anything else that runs on the database will be displayed in the report as well and it’s quite difficult to isolate your operations.

What I did at the end was quite simple, I traced the session. When using DBMS_MONITOR.SESSION_TRACE_ENABLE we can specify two parameters, one for displaying bind variables values and one for displaying wait events in the trace.

I used the following to trace the session including waits information:

exec dbms_monitor.session_trace_enable(<sid>,<serial#>,waits=>true);

When I looked at the trace I could see lines similar to this:

WAIT #3083967036: nam=’db file sequential read’ ela= 7 file#=4 block#=9953 blocks=1 obj#=14620 tim=1459551972939636

So all I had to do is to get the object number that appeared the most. I did that using the following linux command:

grep 'db file seq' db_ora_4615.trc | sed -e 's/.*obj#=//g' | sed -e 's/ .*//g' | sort -n

This command takes only the lines containing the ‘db file seq’ text (which is the event I’m looking for), deletes everything before and after the object number and sorts it so it will be easy to see the different objects.

The result was that one object appeared most of the time, and when I checked which object it was (by querying DBA_OBJECTS according to the OBJECT_ID column) I found my index.

Found it! But What is the Problem?

Finding the index was the first step. Now, I needed to prove that this index was causing the problem by disabling it (while all the others are enabled) and running the test. I was right! the performance was much better.

The next step was to understand why this index was causing the performance problem. I had no idea, so the only thing that came to my mind was to look at the relevant column in the insert statements and try to see if I understand something. So I did another trace, this time with “binds=>true” as well because the application used bind variables and no one knew the actual values. When I looked at the statements it all became clear.

The Conclusion

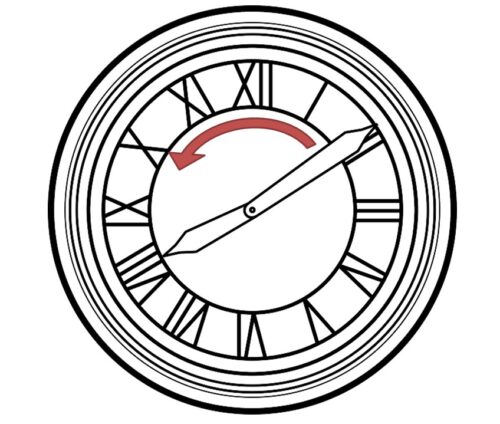

In the insert statements I saw that most of the column values were constant (or almost constant), but the specific column with the problematic index was totally random. The column was numeric and quite long (it was something like an internal generated number). When inserting a value to an index, Oracle needs to get the correct index block so it can insert the new column value into it. If everything is in memory, that’s great. But if not, Oracle will have to search the index structure and get the relevant blocks from the disk (during this time it waits on “db file sequential read” wait event). So if we insert many random values, Oracle will need to read blocks from all over the index, and that’s a lot of I/O if the index is large.

Solution

In this case it was an application generated number. They used random number for no reason, so they simply change that to be sequential numbers. That way, Oracle will need index blocks from the same area of the index (and each block will contain more that a single new value). This change made a huge difference in performance and the problem was solved.

Summary

As you can see, analyzing a problem is not always easy, not always straight forward and sometimes even requires trial and error. It is important sometimes to take a step back and simply think: “What can be the reason?”, “How do I prove it?”, “What’s the next step?” and “what tools might help me?”.

I hope you enjoyed, I’ll try to post some more analysis example like that in the future.

Execellent research.

However, values for p1,p2,p3 can be derived from v$session also if the session is active

Thanks, glad you enjoyed it.

You are basically right, you can see the values of p1,p2 and p3 in v$session. However, this is only a “real time” info, once the wait is over, the data is gone. It is nearly impossible to follow all the waits and identify the relevant segment. For that you will need wait event history with the parameters values, and this can be found in a trace file or ASH.

I admit I have taken to saving the V$SESSION stats to my own ASH because it does lack some data.

Michael, the data in V$SESSION can change so quickly that you won’t always be able to catch what you are looking for. It happened to me before and in that case I needed “buffer busy wait” and got it eventually from segment statistics.

Excellent article!!

Amitzil,

This was really excellent. You would be surprised at how few posts of this kind there are! Anyone can learn the wait events with enough effort, but to understand your reasoning and how you attacked the problem is invaluable!

I really enjoyed it and would love to read more.

Thank you!

Thanks Michael, I really appreciate the comments. It motivates me to write more.

Liron